- NXP Semiconductors N.V.

- NEXT Mobility

- ICT and Industrial

- Smart Factories and Robotics

In recent years, Artificial Intelligence (AI) has expanded rapidly and become integral to many aspects of our lives and work. AI applications such as autonomous driving and text and image generation continue to evolve, accompanied by a growing trend toward Edge AI, in which AI is embedded within devices. This page explains Edge AI using real devices as examples.

- Related Sites

What is Edge AI?

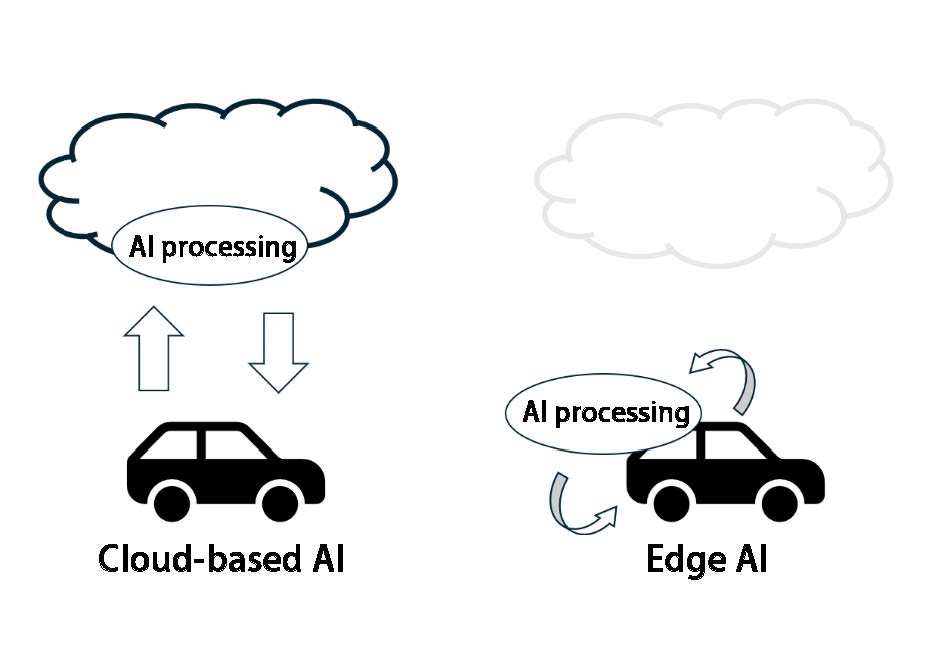

Edge AI is a technology in which data is processed directly on the device rather than being sent to the cloud. Because no round trip to a server is required, real-time performance and efficiency improve significantly. Traditional cloud-based AI systems often experience delays due to data transmission, which limits their effectiveness for applications that require immediate responses.

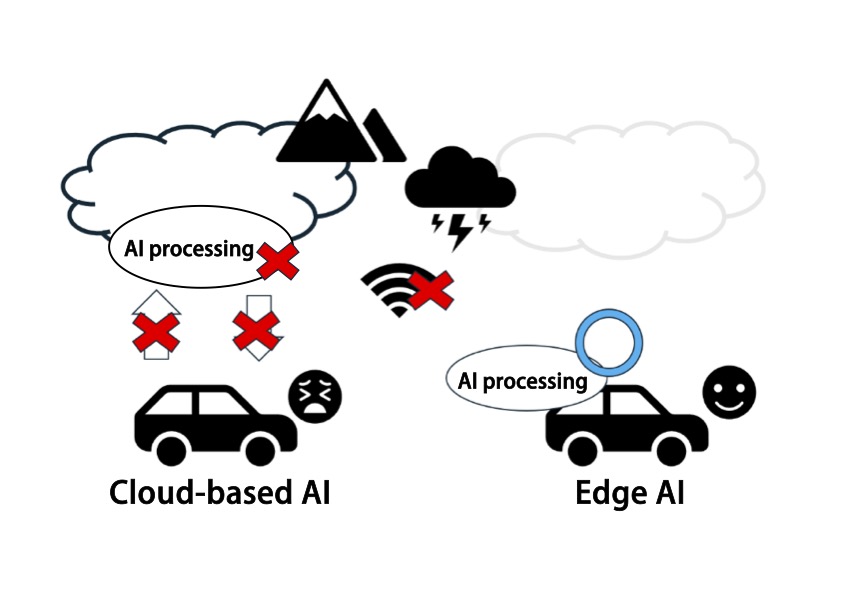

Cloud-Based AI and Edge AI

Another significant advantage of Edge AI is its independence from network connectivity. Processing large amounts of data locally instead of transmitting it to the cloud reduces communication costs. It also improves data privacy, because sensitive information that is processed on the device itself, rather than sent to the cloud, is exposed to fewer security risks. Edge AI is therefore particularly valuable in remote locations or areas with unreliable internet access, where local processing keeps the system operating dependably even without reliable cloud access.
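The communication saving can be illustrated with a back-of-the-envelope calculation. The sketch below compares uploading a raw camera stream to the cloud with uploading only the inference results from the device. Every figure in it (resolution, frame rate, result size) is a hypothetical value chosen for illustration, not a measurement from this page.

```python
# Hypothetical figures, chosen only to illustrate the order of magnitude.
FRAME_WIDTH = 640            # pixels (assumed)
FRAME_HEIGHT = 480           # pixels (assumed)
BYTES_PER_PIXEL = 3          # uncompressed RGB (assumed)
FRAMES_PER_SECOND = 30       # assumed camera frame rate
RESULT_BYTES_PER_FRAME = 16  # e.g. a class ID plus a bounding box (assumed)


def raw_stream_bytes_per_second(width, height, bytes_per_pixel, fps):
    """Data rate when every uncompressed frame is sent to the cloud."""
    return width * height * bytes_per_pixel * fps


def result_stream_bytes_per_second(result_bytes, fps):
    """Data rate when only the on-device inference result is sent."""
    return result_bytes * fps


cloud_upload = raw_stream_bytes_per_second(
    FRAME_WIDTH, FRAME_HEIGHT, BYTES_PER_PIXEL, FRAMES_PER_SECOND
)
edge_upload = result_stream_bytes_per_second(
    RESULT_BYTES_PER_FRAME, FRAMES_PER_SECOND
)

print(f"Raw frames to cloud : {cloud_upload / 1e6:.2f} MB/s")
print(f"Results from device : {edge_upload} B/s")
print(f"Reduction factor    : {cloud_upload / edge_upload:.0f}x")
```

In practice a cloud pipeline would usually compress the video, so the real gap is smaller than this uncompressed comparison suggests, but the direction of the saving is the same.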

Advantages of Edge AI compared to Cloud-Based AI

Edge AI is anticipated to find widespread application across numerous sectors. It is particularly valuable in applications demanding high-speed data processing and immediate responses, such as autonomous vehicles, smart homes, Industrial IoT, and medical devices. In autonomous vehicles, for example, the instantaneous detection of road conditions and obstacles is crucial, making the implementation of Edge AI essential. In this way, Edge AI presents a new paradigm for data processing and is poised to bring about innovative changes in numerous industries.
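To make the importance of response time in a vehicle concrete, the following sketch computes how far a vehicle travels while it waits for a detection result. The speed and the two latency values are hypothetical and serve only as an illustration; they are not measurements of any NXP device.

```python
def distance_travelled_m(speed_kmh: float, latency_ms: float) -> float:
    """Distance in metres covered at a constant speed during a given delay."""
    speed_m_per_s = speed_kmh * 1000.0 / 3600.0
    return speed_m_per_s * (latency_ms / 1000.0)


SPEED_KMH = 100.0  # assumed vehicle speed

# Two assumed processing delays: a short local one and a longer remote one.
for latency_ms in (20.0, 200.0):
    distance = distance_travelled_m(SPEED_KMH, latency_ms)
    print(f"{latency_ms:.0f} ms at {SPEED_KMH:.0f} km/h -> {distance:.2f} m")
```

Under these assumptions, every additional 100 ms of delay corresponds to roughly 2.8 m of travel at 100 km/h, which is why latency is treated as a safety-relevant parameter.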

NXP's Edge AI Solutions

To achieve high-speed processing in the field of Edge AI, NXP offers processors and microcontrollers incorporating Neural Processing Units (NPUs). An NPU is a specialized coprocessor designed for AI inference, capable of rapidly handling extensive computations, thereby significantly enhancing efficiency and overall performance. Below is an introduction to NXP's i.MX 93 application processor and MCX N94x/54x microcontrollers, both of which feature integrated NPUs.

i.MX 93 Application Processor

The i.MX 93 application processor delivers exceptional Edge AI solutions by integrating a Cortex®-A55 processor that operates at up to 1.7 GHz with an Ethos™-U65 NPU.

MCIMX93-EVK

i.MX 93 Application Processor

i.MX 93 Block Diagram

| Multi-core Processing | |
|---|---|
| Connectivity | |
| External Memory | |
| Display Interface | |
| Audio | |
| Operating System | |
| Temperature Range | |

MCX N94x / 54x Microcontroller

The MCX N94x and N54x microcontrollers provide energy-efficient, high-performance Edge AI solutions by integrating an Arm® Cortex®-M33 processor running at up to 150 MHz with an eIQ® Neutron NPU.

FRDM-MCXN947

MCX N94x Block Diagram

| Core Platform | |
|---|---|
| Memory | |
| Peripherals (Analog) | |
| Peripherals (Timer) | |
| Peripherals (Communication Interfaces) | |
| Peripherals (Motor Control Subsystem) | |
| Security | |

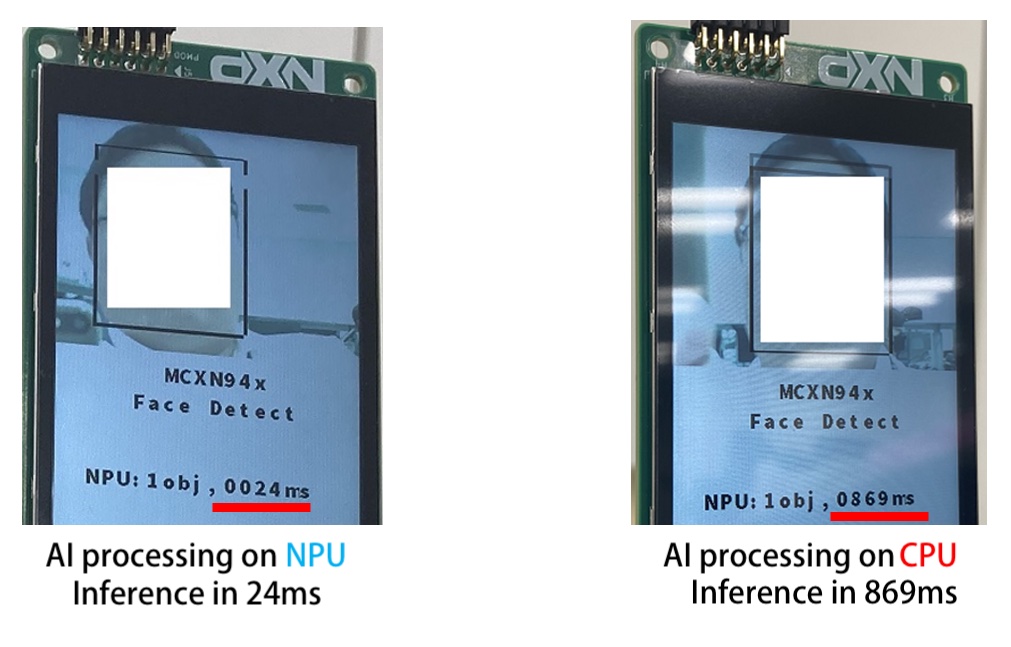

Comparison of AI Processing Speeds Between NPUs and Regular CPUs

Processors equipped with NXP's NPU are able to perform AI processing much faster than regular CPUs. As an example, we measured the speed of face recognition AI processing on the MCX N94x board using both an NPU and a regular CPU.

Results of Comparing Face Recognition AI Processing Speed Between NPU and CPU

This experiment compared the time required for face recognition AI processing using an NPU versus a CPU. The inference time with the NPU was 24 milliseconds, while the CPU took 869 milliseconds. In other words, the NPU performed the AI processing approximately 36 times faster than the CPU (869 / 24 ≈ 36). The exact difference in speed depends on the AI model and software configuration used, but these results strongly suggest that using an NPU for AI tasks can deliver a significant improvement in processing speed.
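The ratio between the two measurements can be checked with a few lines of arithmetic. The sketch below uses only the two inference times reported above. The inferences-per-second figures are a derived estimate that assumes back-to-back inference with no other per-frame overhead, which the experiment does not state.

```python
NPU_INFERENCE_MS = 24.0   # measured inference time with the NPU
CPU_INFERENCE_MS = 869.0  # measured inference time with the CPU


def speedup(baseline_ms: float, accelerated_ms: float) -> float:
    """How many times faster the accelerated path is than the baseline."""
    return baseline_ms / accelerated_ms


def inferences_per_second(inference_ms: float) -> float:
    """Upper bound on throughput, assuming inference is the only cost."""
    return 1000.0 / inference_ms


ratio = speedup(CPU_INFERENCE_MS, NPU_INFERENCE_MS)
print(f"Speedup: {ratio:.1f}x")
print(f"NPU: {inferences_per_second(NPU_INFERENCE_MS):.1f} inferences/s")
print(f"CPU: {inferences_per_second(CPU_INFERENCE_MS):.2f} inferences/s")
```

With these inputs the speedup evaluates to about 36.2, and the NPU path leaves room for roughly 41 inferences per second against just over one per second on the CPU.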

Summary

The use of AI is expanding beyond the cloud to edge devices, and the integration of NPUs into these devices is driving further gains in performance. This page has provided an overview of this trend, introduced devices that incorporate NPUs, and presented a measured comparison of their inference speed. Solutions built on NXP devices equipped with NPUs benefit from rapid and efficient AI processing. For details, please see the official NXP website listed under Related Sites.

Related Info

Part Information

View part information for the featured products on e-NEXTY, the parts information site operated by NEXTY Electronics.
